Sometimes clients report that the plugin is logging an abnormally high number of visitor carts, most of them logged within seconds of each other during a fixed window of hours in the day.

We observed the data on a few of those sites and concluded that these are not carts abandoned by actual visitors. So where did these carts come from?

These carts were created by bots, specifically web crawlers, which we've all heard about.

Why crawl a website?

Web crawling started as a way to map the Internet and how websites are connected to one another. Search engines also use it to discover and index new pages. Crawlers have also been used to probe a website for vulnerabilities and report any issues they spot.

How does it work?

To crawl the web or a website, you need an entry point. Robots need to know that your website exists so they can come and have a look at it. Back in the day, you would submit your website to search engines to tell them it was online. Now, a few links pointing to your website are enough, and voilà, you are in the loop!

Once a crawler lands on your website, it analyses your content line by line and follows each link it finds, whether internal or external. It keeps going until it lands on a page with no more links or encounters an error such as 404, 403, 500 or 503.

This means that robots will follow each of the add to cart links which you might have on your site or on affiliate sites. This is how visitor carts are created. Our plugin captures any carts that are created when the ‘Start tracking carts from the Cart page’ setting is enabled.
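To see which links on a page a crawler could follow into the cart, here is a minimal Python sketch. It assumes the add-to-cart links carry an `add-to-cart` query parameter, as in the robots.txt rule later in this article. The sample HTML, the product ID `42` and the helper names are made up for illustration; they are not part of the plugin.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs, urlsplit


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, much like a crawler would."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def add_to_cart_links(html):
    """Return the links whose query string has an add-to-cart parameter."""
    collector = LinkCollector()
    collector.feed(html)
    return [
        link
        for link in collector.links
        if "add-to-cart" in parse_qs(urlsplit(link).query)
    ]


# Hypothetical page content, for illustration only.
SAMPLE_PAGE = """
<a href="/product/blue-shirt/">Blue shirt</a>
<a href="/shop/?add-to-cart=42">Add to cart</a>
<a href="/cart/">View cart</a>
"""

found = add_to_cart_links(SAMPLE_PAGE)
print(found)  # ['/shop/?add-to-cart=42']
```

Every link this returns is one that a crawler can request, and each such request can create a visitor cart.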

How do I stop it from happening?

If robots aren’t told otherwise, they will crawl everything. This is where the robots.txt file becomes very useful. It tells crawlers which pages they cannot crawl, and the rules can be specific to a crawler (e.g. Googlebot or MSN Bot).

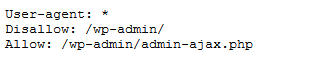
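As a rough illustration of per-crawler rules, the sketch below uses Python's standard `urllib.robotparser` to check what two different bots may fetch. The rule set, the `SomeOtherBot` name and the URLs are assumptions made up for this example, not rules your site needs to use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot gets its own group, everyone else
# falls under the "*" group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /cart/

User-agent: *
Disallow: /cart/
Disallow: /checkout/
"""


def build_parser(text):
    """Parse robots.txt content held in a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Googlebot only has /cart/ disallowed, so /checkout/ is still allowed.
googlebot_checkout = parser.can_fetch(
    "Googlebot", "https://yourfakewebsite.com/checkout/"
)
# Any other bot is covered by the "*" group, which blocks /checkout/.
other_checkout = parser.can_fetch(
    "SomeOtherBot", "https://yourfakewebsite.com/checkout/"
)

print(googlebot_checkout, other_checkout)  # True False
```

A crawler that has its own group follows that group rather than the `*` group, which is why Googlebot can still fetch `/checkout/` here.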

When you create a WordPress website, it automatically serves a virtual robots.txt file from the root of your site. For example, if your site is located at yourfakewebsite.com, you should be able to visit yourfakewebsite.com/robots.txt and see the file.

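Open the file for editing and add the lines below. Because the default WordPress file is virtual (generated on request rather than stored on disk), you may first need to create a physical robots.txt file in your site's root folder, or use a plugin that lets you edit it: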

    User-agent: *
    Disallow: /*add-to-cart=*

The first line indicates that all bots need to follow the rules listed below it. The second line says that add-to-cart links are not allowed and should not be crawled.

We also recommend stopping the Cart, Checkout and My Account pages from being crawled. This can be achieved by adding the lines below:

    Disallow: /cart/
    Disallow: /checkout/
    Disallow: /my-account/

So, while this still allows crawlers to index your site, it stops them from following the add-to-cart links and creating unnecessary visitor carts.
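If you want to sanity-check the rules before relying on them, the sketch below tests a few URLs against the four Disallow patterns from this article. It is a simplified, hand-written matcher, not a full robots.txt implementation: it assumes `*` matches any run of characters and a trailing `$` anchors the end of the URL, which is how the major search engines interpret these patterns. As far as we know, Python's built-in `urllib.robotparser` only does plain prefix matching, so it is not used here. The sample URLs are made up.

```python
import re
from urllib.parse import urlsplit

# The Disallow patterns suggested in this article.
DISALLOW_PATTERNS = [
    "/*add-to-cart=*",
    "/cart/",
    "/checkout/",
    "/my-account/",
]


def pattern_to_regex(pattern):
    """Turn a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(url, patterns=DISALLOW_PATTERNS):
    """Return True if the URL's path and query match any Disallow pattern."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # re.match anchors at the start, like robots.txt prefix matching.
    return any(pattern_to_regex(p).match(target) for p in patterns)


print(is_disallowed("https://yourfakewebsite.com/shop/?add-to-cart=42"))      # True
print(is_disallowed("https://yourfakewebsite.com/my-account/orders/"))        # True
print(is_disallowed("https://yourfakewebsite.com/product/blue-shirt/"))       # False
```

Product and shop pages remain crawlable, while the add-to-cart, Cart, Checkout and My Account URLs are blocked.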

Once such carts are no longer logged, it becomes easier for you to analyze your abandoned cart data and use the plugin to recover more orders.